The 3 major problems with spatial audio technology

Published on March 4, 2025

You’ve probably heard of “Spatial Audio”, “Dolby Atmos” or “Immersive Audio” before, but do you actually use these features on a daily basis? I get the opportunity to try out hundreds of different headphone models each year with all the latest tech, and yet I’ve never found an implementation of spatial audio that I actually want to use. When we polled our audience last year, we found that the majority of people also do not use spatial audio.

If that’s the case, why are so many audio brands dumping millions of dollars into developing and promoting this technology? Does spatial audio have a future in the ears of the masses? To understand how we got here, and where the industry is headed, we need to dive into exactly how spatial audio works, from the music creation process down to how our brains interpret sound.

What exactly is spatial audio?

If you want a deep dive into the technology, you can check out our guide to everything you need to know about spatial audio in headphones. For the non-audio nerds, here’s the TL;DR:

- The shape of our shoulders, head, and outer ears impacts sound waves before they reach our eardrums, which helps our brain identify the location and direction of sound sources. This is known as the head-related transfer function (HRTF).

- “True” surround sound uses multiple speaker drivers in different locations, like a surround speaker setup in a movie theatre.

- “Virtual” surround sound applies an HRTF-based binaural algorithm to simulate having multiple speakers around you.
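To make the “virtual” approach concrete, here is a minimal Python sketch of binaural rendering: a mono source is filtered with one impulse response per ear, so the far ear hears it slightly later and quieter. The function names, the sample rate, and the crude `toy_hrir_pair` model are all assumptions for illustration. A real spatial audio product would use measured head-related impulse responses, not this delay-and-gain stand-in.

```python
import numpy as np

SAMPLE_RATE = 48_000  # Hz; an assumed value for this sketch


def toy_hrir_pair(azimuth_deg, sample_rate=SAMPLE_RATE, length=64):
    """Return crude (left, right) impulse responses for a source direction.

    Positive azimuth means the source is to the listener's right.
    This only models the time and level difference between the ears;
    a measured HRIR also captures outer-ear, head, and shoulder filtering.
    """
    max_itd_s = 0.0007  # roughly the largest delay between human ears
    pan = np.sin(np.radians(azimuth_deg))  # -1 (left) .. +1 (right)
    delay = int(round(abs(pan) * max_itd_s * sample_rate))

    near = np.zeros(length)
    far = np.zeros(length)
    near[0] = 1.0                       # near ear: immediate, full level
    far[delay] = 1.0 - 0.5 * abs(pan)   # far ear: later and quieter

    # Source on the right: the left ear is the far ear, and vice versa.
    return (far, near) if pan >= 0 else (near, far)


def render_binaural(mono, azimuth_deg):
    """Place a mono signal at azimuth_deg; returns an array of shape (2, n)."""
    left_ir, right_ir = toy_hrir_pair(azimuth_deg)
    left = np.convolve(mono, left_ir)
    right = np.convolve(mono, right_ir)
    return np.stack([left, right])
```

A virtual surround setup would repeat this for each simulated speaker and sum the results into one stereo signal for your headphones.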

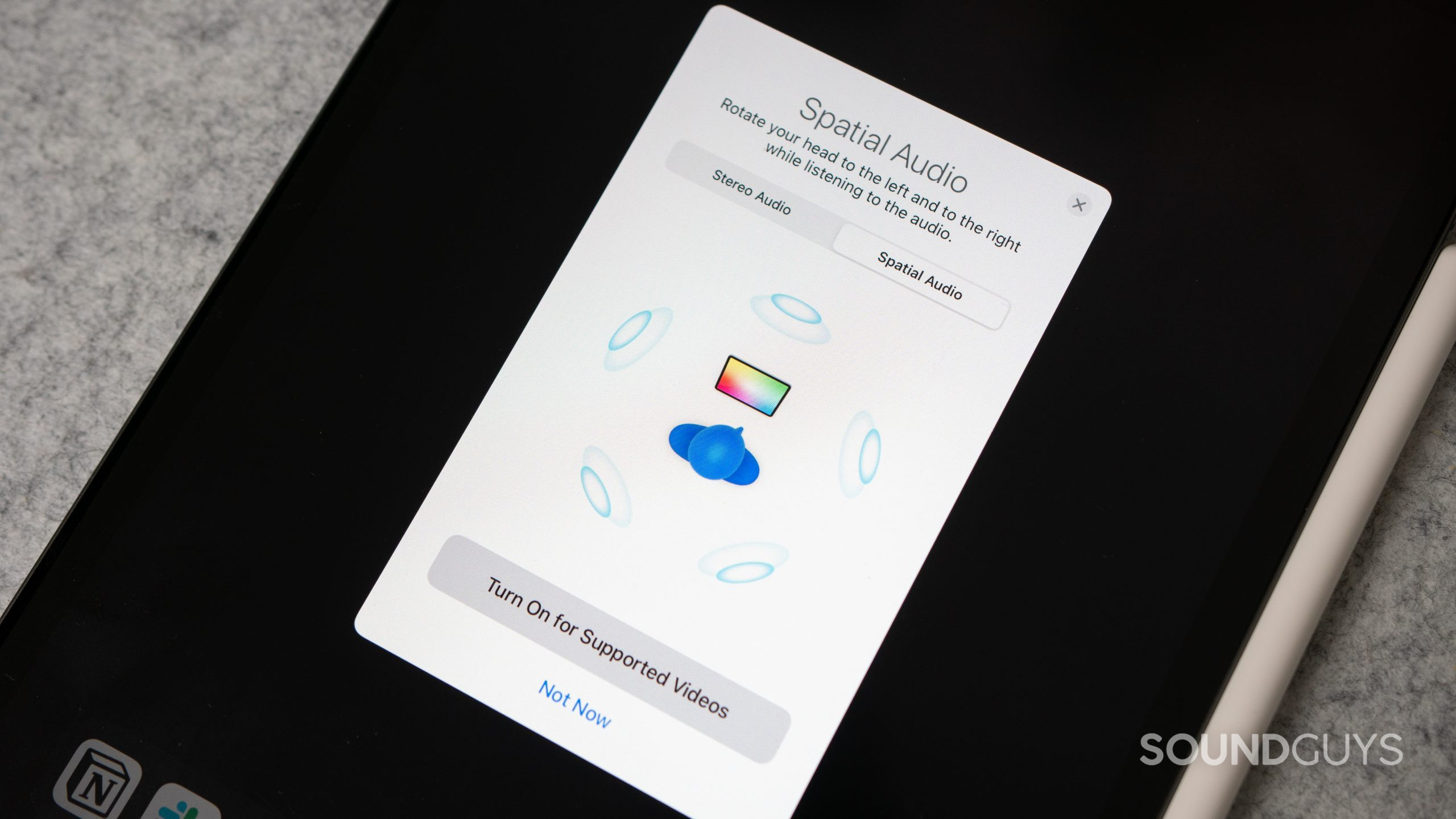

Spatial audio can be considered “virtual surround sound” because of how it replicates the experience of being surrounded by multiple audio sources. It can also involve using head-tracking sensors to lock the position of the virtual speakers in space while you turn your head around. You can learn more, and hear spatial audio demonstrations for yourself, in the SoundGuys explainer video on spatial audio.

Problem #1: Everyone’s ears are different

If spatial audio algorithms are developed using a head-related transfer function (HRTF), the question is: whose head are they using? Because the shape of our shoulders, head, and outer ears alters sound waves before they reach our eardrums, everyone’s HRTF is different, and each person’s brain has learned to compensate for their own anatomy in order to locate sounds. Biologically, it was important to locate sounds to know which direction an attacking tiger was coming from. Today, I just want my favorite Daft Punk tracks to sound good in my headphones. Either way, the shape of my head is different from yours, so you can’t apply the same HRTF correction to both of our earbuds and get the same perception of space.

For example, here at the SoundGuys lab, we use a Brüel & Kjær 5128 testing head. The shape of its head and ear canal was developed from MRI scans of 40 different people. That said, it is only an approximation of an average head; it doesn’t match your particular head exactly. If a testing head such as this one were used to develop a spatial audio algorithm, the effect would not perfectly match the way your ears interpret sound.

As technology has developed, there have been some improvements made in this regard. With the Apple Personalized Spatial Audio system for AirPods and Beats, you can scan your head using the TrueDepth camera on your iPhone. Sony also lets you upload photos of your ears to the Sony app to personalize the spatial audio experience of your headphones with Sony 360 Reality Audio. Neither of these solutions can truly replicate your HRTF though.

To accurately measure your HRTF, you need to place microphones in your ear canals and record a test signal as it is played from loudspeakers positioned all around you (a binaural recording). The difference between the signal that leaves the loudspeaker and the signal that arrives at your ear canal captures the effect of your HRTF. This process makes measuring individual HRTFs time-consuming and impractical outside of a laboratory setting.
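The “difference” between the two signals can be expressed as a ratio of their spectra, H(f) = Y(f) / X(f), where X is the signal sent to the loudspeaker and Y is what the in-ear microphone records. The sketch below simulates one such measurement in Python. The function name and the made-up `ear_ir` filter are assumptions for illustration, and a real measurement would also have to remove the response of the loudspeaker, microphone, and room.

```python
import numpy as np


def estimate_transfer_function(played, recorded, eps=1e-12):
    """Estimate H(f) = Y(f) / X(f) for one speaker position and one ear."""
    n = max(len(played), len(recorded))  # zero-pad both to a common length
    X = np.fft.rfft(played, n)
    Y = np.fft.rfft(recorded, n)
    # Regularized division: eps avoids dividing by zero in empty bins.
    return Y * np.conj(X) / (np.abs(X) ** 2 + eps)


# Simulated measurement: white noise played from a single direction.
rng = np.random.default_rng(0)
test_signal = rng.standard_normal(4096)

# Made-up stand-in for how one listener's anatomy filters that direction.
ear_ir = np.array([0.0, 0.9, 0.3, -0.2, 0.05])
in_ear_recording = np.convolve(test_signal, ear_ir)

hrtf = estimate_transfer_function(test_signal, in_ear_recording)

# Back in the time domain, the estimate matches the filter we simulated.
recovered_ir = np.fft.irfft(hrtf, n=len(in_ear_recording))[: len(ear_ir)]
```

A full HRTF set repeats this for both ears and for many loudspeaker positions around the listener, which is a large part of why the process is slow.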

Problem #2: Creating spatial audio music is a challenge

While spatialization algorithms can attempt to turn a stereo audio track into multi-channel spatial audio, this creates a distinctly different mix than what the artist originally intended. If you want to listen to your music the way the artist themselves created the track, then your favorite music artist needs to mix and master the track in spatial audio.

Enter Dolby Atmos.

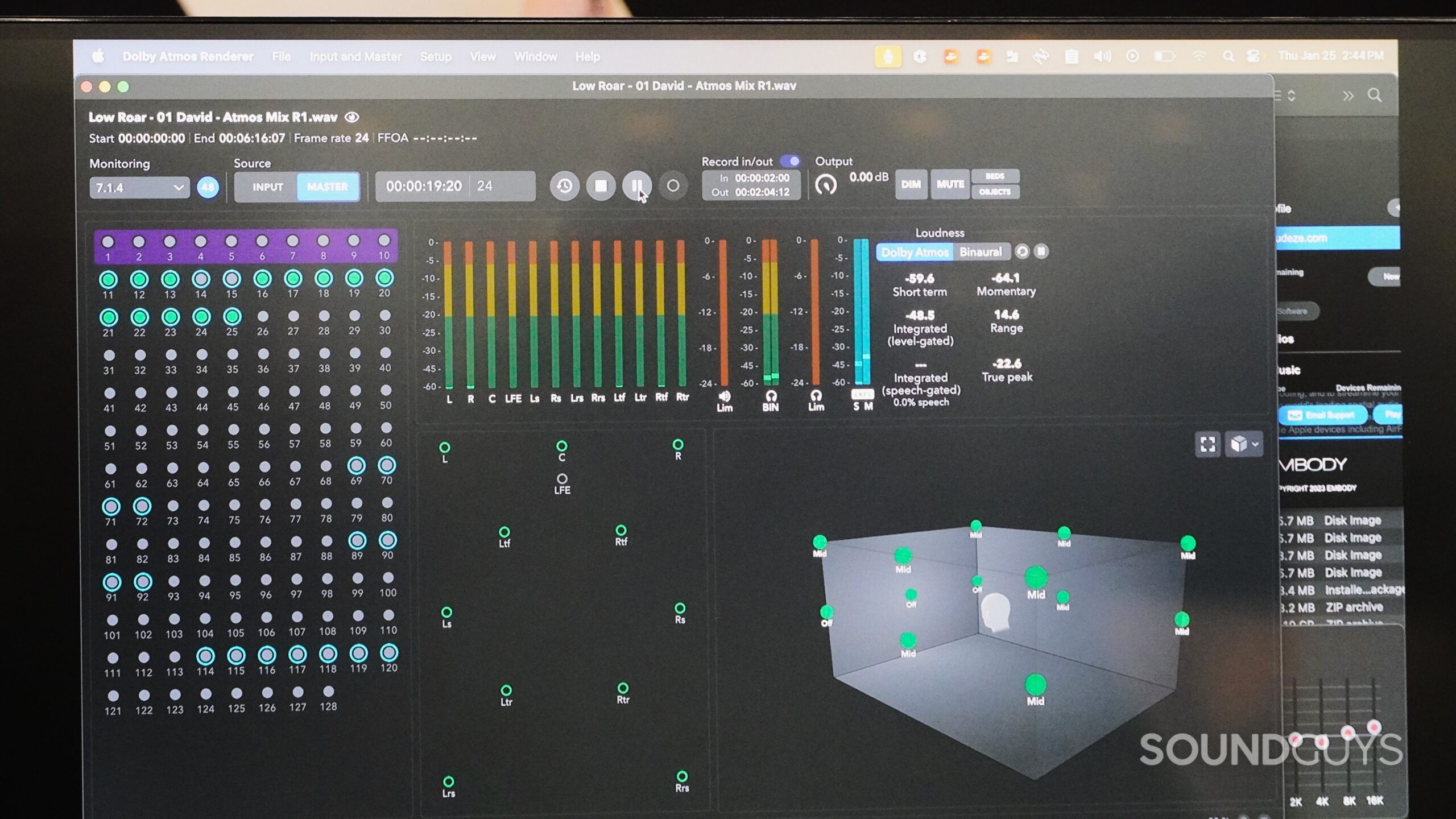

You can think of Dolby Atmos as a way of packaging audio. The audio content needs to be packaged in a special way using Dolby Atmos, and the playback device then needs to be able to unpack that content for you to hear it as spatial audio. As you can imagine, this adds a lot of steps to both the music creation and music listening processes.

Let’s start with the music creation process. Dolby Atmos uses object-based mixing, which means assigning sounds nearly anywhere within a virtual 3D space. This requires extra steps for the producer or mixing engineer to create a Dolby Atmos mix of the track. It also requires a special skillset, and a poorly mixed Dolby Atmos track will not sound good on any Dolby Atmos sound system, just as a poorly mixed stereo track doesn’t sound good on regular stereo setups.
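As a rough illustration of what “object-based” means, the Python sketch below stores a sound together with a position and only works out speaker levels at playback time, for whatever speaker layout is available. This is not Dolby’s renderer or file format. The `AudioObject` class, the two layouts, and the distance-based `speaker_gains` rule are hypothetical stand-ins chosen to keep the idea visible.

```python
import math
from dataclasses import dataclass


@dataclass
class AudioObject:
    """A sound plus where it should appear (hypothetical structure)."""
    name: str
    position: tuple  # (x, y, z) in metres, with the listener at the origin


# Hypothetical speaker layouts: speaker name -> (x, y, z) position.
STEREO = {"L": (-1.0, 1.0, 0.0), "R": (1.0, 1.0, 0.0)}
SURROUND_WITH_HEIGHT = {
    "FL": (-1.0, 1.0, 0.0),
    "FR": (1.0, 1.0, 0.0),
    "RL": (-1.0, -1.0, 0.0),
    "RR": (1.0, -1.0, 0.0),
    "TOP": (0.0, 0.0, 1.5),
}


def speaker_gains(obj, layout, rolloff=2.0):
    """Give closer speakers more level, normalized to constant total power."""
    weights = {}
    for speaker, speaker_pos in layout.items():
        distance = math.dist(obj.position, speaker_pos)
        weights[speaker] = 1.0 / (distance ** rolloff + 1e-6)
    norm = math.sqrt(sum(w * w for w in weights.values()))
    return {speaker: w / norm for speaker, w in weights.items()}


# The same object, rendered for two different playback systems.
synth = AudioObject("synth", (0.0, 0.0, 1.5))
stereo_gains = speaker_gains(synth, STEREO)
surround_gains = speaker_gains(synth, SURROUND_WITH_HEIGHT)
```

The mix engineer decides where each object sits; the playback system decides which speakers reproduce it. That split is what lets one mix scale across setups, and it is also why the engineer needs to hear the result on a capable system to judge it.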

In addition to the learning curve required to use the Dolby Atmos rendering software, it’s very challenging to create a proper Dolby Atmos mix without a very expensive music production studio. This runs counter to current trends in the music production industry. Unlike the days of old, chances are that most music you listen to today wasn’t recorded in some fancy million-dollar music studio, but rather in someone’s home studio, on their laptop. Music production software has come a long way, and many tracks with over a billion streams were produced by a single person, in their bedroom, on their laptop. For example, the Grammy Award-winning album “When We All Fall Asleep, Where Do We Go?” was produced by Billie Eilish and FINNEAS entirely in their bedroom studio.

In order to properly mix a Dolby Atmos track, you need a multi-channel surround sound speaker setup in a treated room. Producers can use Dolby’s binaural rendering tools to monitor Atmos mixes on headphones; however, headphone-based monitoring has limitations due to individual HRTF differences. When the sound bypasses parts of your body that can affect sound and goes straight into your ears, you’re missing out on the spatial localization cues your brain depends on.

Problem #3: No one can agree on a spatial audio format

Let’s say you go through the extensive process of mixing your music in Dolby Atmos. How do you get people to actually hear that mix? The platform that the vast majority of people use to listen to music is Spotify. As of writing, Spotify has no embedded support for Dolby Atmos mixes, or any other form of spatial audio. Ditto for YouTube. Until these major platforms embrace spatial audio, it won’t hit the mainstream.

But what about Apple Music?

When you dive into the Apple Music app, you can find a host of songs and playlists touting Dolby Atmos playback, but I was shocked to find out that this isn’t true Dolby Atmos, or at least not the same Dolby Atmos playback as other platforms. Dolby Atmos mixes on Apple Music are processed by Apple’s own Spatial Audio Renderer, which can sound very different from the original Dolby Atmos mix. For that reason, there is now a dedicated paid plug-in from Audiomovers for hearing how your Dolby Atmos sessions will sound on Apple Music specifically. The fact that a mixing engineer has to create a separate mix just for Apple Music is absurd, and adds another layer of complication to the music distribution process.

If you are listening to Dolby Atmos mixes on Apple Music, but are using earbuds such as AirPods, you likely won’t get as strong a spatial audio effect as you would with headphones. When it comes to localizing sounds in space, the general hierarchy is speakers > headphones > earbuds.

If you use AirPods or Beats with an iOS device, you can convert an incoming stereo track to surround sound using the Spatialize Stereo feature, but this can often significantly disrupt the way the mix sounds. Beyond Apple’s earbuds, I see lots of other earbuds and headphones released every year that feature some sort of spatial audio effect that you can turn on in the companion app, but I have yet to try one that sounds like listening to a proper surround sound speaker setup. Most recently, I tested the Marshall Monitor III ANC, which has a spatial audio feature called Soundstage mode. To my ears, this feature made my music sound like it was being drowned in reverb, rather than like a proper surround sound mix.

With various spatial audio formats out there, such as Dolby Atmos, Sony 360 Reality Audio, Apple Spatial Audio, Bose Immersive Audio, Marshall Soundstage, and more, there are steep barriers to creating, distributing, and listening to music in the same format. Moreover, all of these are touted as spatial audio, but they can mean different things in practice: emulating various rooms, using head-tracking to give the effect of speakers in front of you, or adding effects such as EQ and reverb.

Is Eclipsa Audio the solution?

Earlier this year, Google and Samsung announced a new open-source immersive audio format to compete with Dolby Atmos that they are calling Eclipsa Audio. Since Eclipsa Audio is open source and free, it removes many of the previous barriers to entry for music artists, manufacturers, and distributors. Samsung is enabling Eclipsa Audio across its 2025 TV lineup, while Google says artists will soon be able to upload Eclipsa Audio tracks to YouTube.

Could Eclipsa Audio become standardized across the audio industry? Maybe, but it’s going to take a long time before we get there. We could also end up in a situation where some products only support Dolby Atmos while others support Eclipsa Audio, which would create headaches for all. For now, spatial audio remains a niche feature with major hurdles in personalization, content creation, and distribution. If formats like Eclipsa Audio gain traction, we may see a more accessible and consistent spatial audio experience, but we’re not there yet.