
SoundGuys contents and methods audit 2022

March 28, 2022

Every year, we run down major changes and updates, and explain issues relating to coverage on SoundGuys. While it will be difficult to see with this particular audit, more has changed from 2021 to 2022 than in any other year at SoundGuys by a huge margin. Most of that is stuff we’ve done in the background that you won’t see, but we’ll run down the most important issues here.

Site overhaul

You may or may not be aware, but SoundGuys is a sister site to Android Authority. As a lot has changed both on the backend and in our day-to-day operations, it’s only fitting that we update our site’s frontend to reflect that. We’ve migrated to a new site design that will be much easier to read, much lighter on mobile browsers, and much prettier. Don’t worry: the experience will largely stay the same, with the same features. It’ll just look a bit different.

Core functions of the site should remain the same, but as with any complete code re-do there may be some weirdness we miss before everything goes live. Don’t hesitate to send screenshots on Twitter to @realsoundguys if you run across a bug in the wild.

On the backend, our site should load faster for you, so fewer milliseconds of waiting for your content to get served up to your eyeballs. Additionally, no more ancient hodgepodge of themes to create odd artifacts, accessibility issues like too-light text, or that kind of deal. We’re also merging our test database and other goodies into the site itself, so eventually we’ll be serving up charts ad-hoc instead of as an image asset. It sounds like a little thing, but it opens doors for us down the road.

Expanding testing

Let’s face it: how people listen to their products varies as much as the headphones on the market themselves, and sometimes a test in ideal conditions doesn’t tell the whole story. Unfortunately, a lot of the new tests we add tend to wind up getting collected, archived, and then dusted off a year or so later once we have something substantial to add to our content.

Microphone testing

We’ve been slowly accumulating noise samples like a busy street corner, a subway, and an office environment. Basically, we’re starting to test how well the microphones in headphones are able to separate voice from noise and other distractions. You can see this in a couple of places already, though we’re still figuring out the best way to present it.

Additionally, we’ve standardized and upgraded our mic demo system. From our Technical Reviewer AJ:

We’ve made a big improvement to how we demonstrate the microphone performance of products we review. We now use a standardized test setup that plays back pre-recorded phrases from a calibrated artificial mouth in our test chamber, either with or without simulated background noises, simulated reverberant spaces, or artificial wind. This means that samples from every product can be directly compared, which makes it far easier to make meaningful comparisons between products in terms of the raw speech quality or the product’s ability to reject noise.

You can hear an example here, where we run through recordings with the Sony WH-1000XM3 in ideal and less-than-ideal conditions.

Sony WH-1000XM3 microphone demo (ideal conditions)

Sony WH-1000XM3 microphone demo (street conditions)

Sony WH-1000XM3 microphone demo (windy conditions)

We’ve also created the data visualization apps necessary to start creating microphone-appropriate plots (polar plots, etc.) for standalone microphones tested in the lab. While mic coverage will be slow, it will be more solid than it is now!

Speaker testing

Our speaker test setup is almost ready to start collecting data—I just have to figure out how to best mount lasers for alignment. The new setup will enable us to take a deeper look at speakers with our rig, and hopefully provide some more meaningful data to our readers on both Bluetooth and bookshelf speakers. Unfortunately, sound bars will still be a colossal pain to test due to their sheer mass.

Other stuff

As a part of sharing an office with the Android Authority folks, we have access to a Total Phase Advanced Cable Tester v2. Though it’s definitely not something that your average audiophile would care about, it lets us kick the tires on common USB and HDMI cables to see if there’s anything in particular that should be avoided.

…I won’t be sticking coat hangers in there, I promise.

A note on reviews and scoring

A few people have pointed out what they believe to be shortcomings in our scoring, and we’ll be the first to tell you we’d love to do much, much more than we currently do. However, our philosophy on coverage paints us into a pretty tight corner, and many people disagree fundamentally with how we do things. That’s perfectly fine. However, we’re not changing our philosophy anytime soon.


The long and short of it is that we have to serve as a repository for anyone to find information on a product, not just the people who like a particular target, have golden ears that AirPods actually fit into, or some other thing taken for granted that causes issues for a smaller segment of people. We have to provide information for everyone, and we can’t cater to only one type of audio product user. That’s the complete opposite of what we’re setting out to do.

Overall scores

I’ve been doing headphone reviews for large outlets for over eleven years now, and trust me when I tell you I’ve heard every complaint possible with 10-point scale scoring. Unfortunately, the conclusion that I keep whacking into is that a single score is a grossly inadequate way of quantifying the overall value of a set of headphones—instead, it should be used as a pass/fail mechanism at most. One number does not communicate anything at all about individual aspects of a product’s performance, and drawing inferences from that number is putting the cart before the horse. Everyone wants “the best” things, but what “the best” is for one individual is a moving target—not something that can be divined by reading a number at the top of a page while ignoring everything that follows.

Before we threw the comments section into the dumpster, I spent hours a week deleting comments with “this thing is worthless, it’s not blue/wireless/in-ears/etc.” In the use case for those particular commenters, any score of a product outside of their desired feature set would have been a terrible way to assess whether they wanted that product or not, and nothing outside of the category they were looking at was helpful. No site can use an overall score and also predict deal-breakers for everyone. Static scores don’t work that way, and as reviewers we’re forced to come up with a system that works for our purposes.

Until there’s a purely reactive score scheme available, the overall score is a necessary evil. It’s required to rank for reviews in search engines, and it’s a piece of reviews that people are trained to look for. There’s no good schema for overall scores simply because many different product types make tradeoffs to achieve things like a particular form factor, sound quality, wireless audio, and other features. There are just bad overall scores and less-bad overall scores.

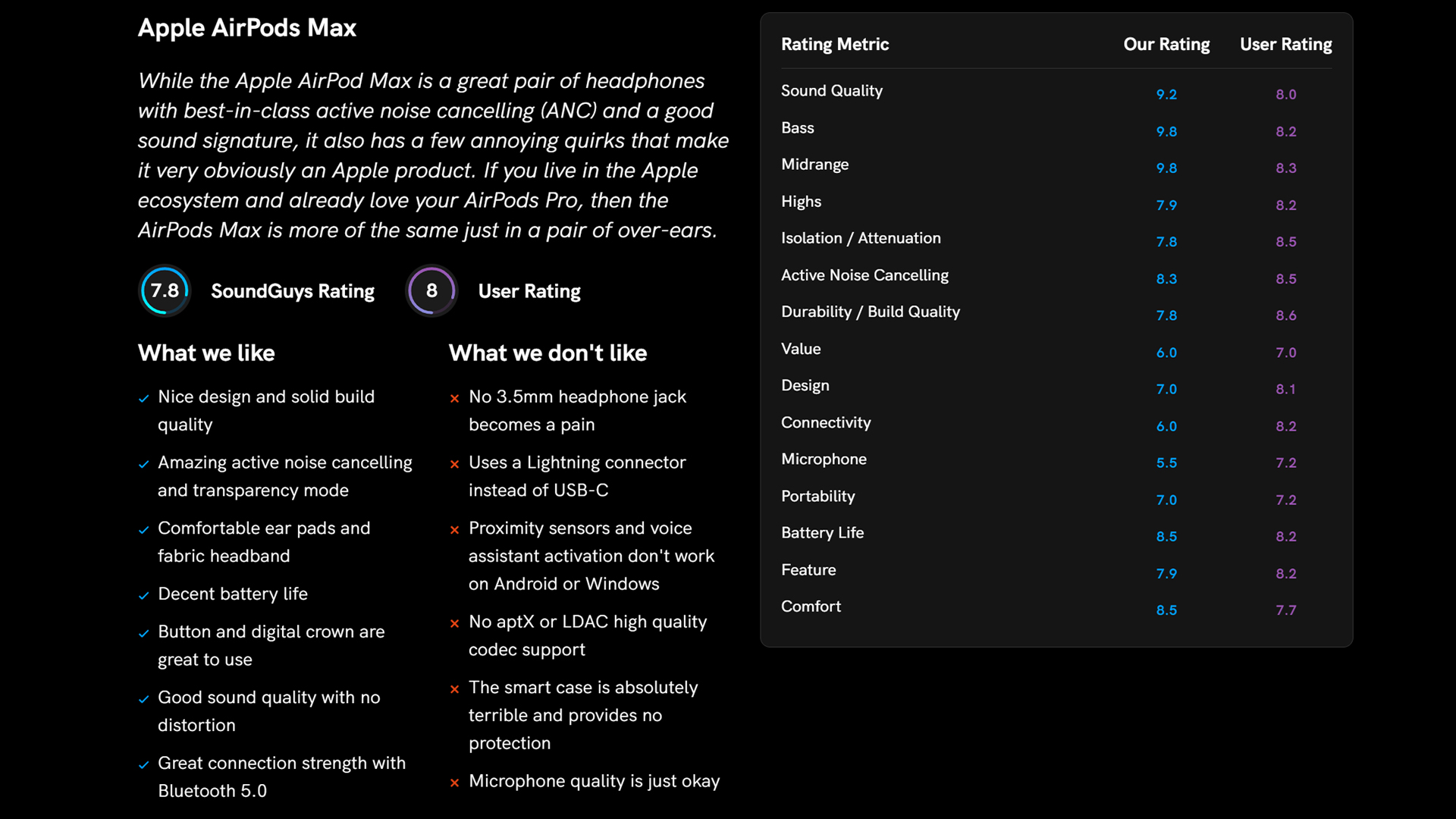

Sub-scores

To navigate this reality, we offer sub-scores that are very visible at the top of the page. Because not everyone is fully settled on a product type (e.g., over-ear headphones, earphones, ANC headphones) when they start looking for their next audio product, we don’t give anything a pass for making a calculated tradeoff. For example, Joe Everyman may not understand that they don’t want AirPods for an intercontinental flight because the battery life just isn’t there. So we score true wireless earphones, ANC headphones, gaming headsets, and anything else that crosses our desks with the same formula for the same test. Unsurprisingly, companies hate this, and almost every month I’m accused of being unfair for not comparing only like products to like products.


The problem is that what a review site is tasked with doing and what a manufacturer is trying to do are wildly different things. SoundGuys has to provide information exclusively for readers of every preference, need, and budget—and we can’t make assumptions about how all our readers use the information we provide. In that light, using different scoring formulas for the same measure across several product types obfuscates how they compare instead of clarifying the issue. Sure, it’d be fine if you want to compare like things to like things, but the instant you stray from that you could be misled unintentionally.
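To make the one-formula idea concrete, here is a minimal Python sketch. None of it is SoundGuys’ actual scoring math: the `battery_score` function, the linear scale, the 40-hour ceiling, and the example products are all assumptions invented for illustration. The only point it makes is that every product type passes through the same function, so the resulting numbers can be compared directly.

```python
from dataclasses import dataclass


@dataclass
class Product:
    name: str
    category: str
    battery_hours: float


def battery_score(hours: float, full_marks_hours: float = 40.0) -> float:
    """Map battery life to a 0-10 score.

    Hypothetical formula: linear in hours, capped at 10 once the
    product reaches `full_marks_hours`. The category is deliberately
    NOT an input, so earbuds and over-ears are judged the same way.
    """
    clamped = min(max(hours, 0.0), full_marks_hours)
    return round(clamped / full_marks_hours * 10, 1)


# Made-up products, not real measurements.
products = [
    Product("Example true wireless earbuds", "true wireless", 4.0),
    Product("Example ANC headphones", "ANC over-ear", 30.0),
]

# Because one formula scored everything, sorting across categories is meaningful.
ranked = sorted(products, key=lambda p: battery_score(p.battery_hours), reverse=True)
for p in ranked:
    print(f"{p.name} ({p.category}): {battery_score(p.battery_hours)}")
```

If each category had its own formula instead, a 4-hour set of earbuds and a 30-hour set of headphones could both land near the top of the scale, and the score alone would no longer tell a reader which one lasts through a long flight.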

Consequently, we’re not interested in hearing why it’s unfair that we score things like true wireless earphones’ battery life “poorly.” It’s not always obvious that something isn’t great from a review, and the quickest way to show people that is to provide a meaningful comparison—for example, with a score that treats all products the same way. We absolutely want it to be blisteringly obvious whether or not one product is better at battery life than another, and so on. In that light, it is exactly fair to get a little rough with the scoring.

Reader scores

In order to provide more experiential data, we give readers the ability to score products they own or have tried. We also prominently feature these scores right next to our own scores in hopes that people don’t simply take just our word for something—there’s a level of consensus built into the scores shown at the top. Certainly, the less popular a product is, the lower the quality of user ratings. However, it’s how we get around the fundamental disconnect between our philosophy and interested buyers’ needs: people who were looking for that product have a constellation of wants potentially satisfied by that thing, and those that own said product can use our scoring sliders to tell other interested people how they like it.

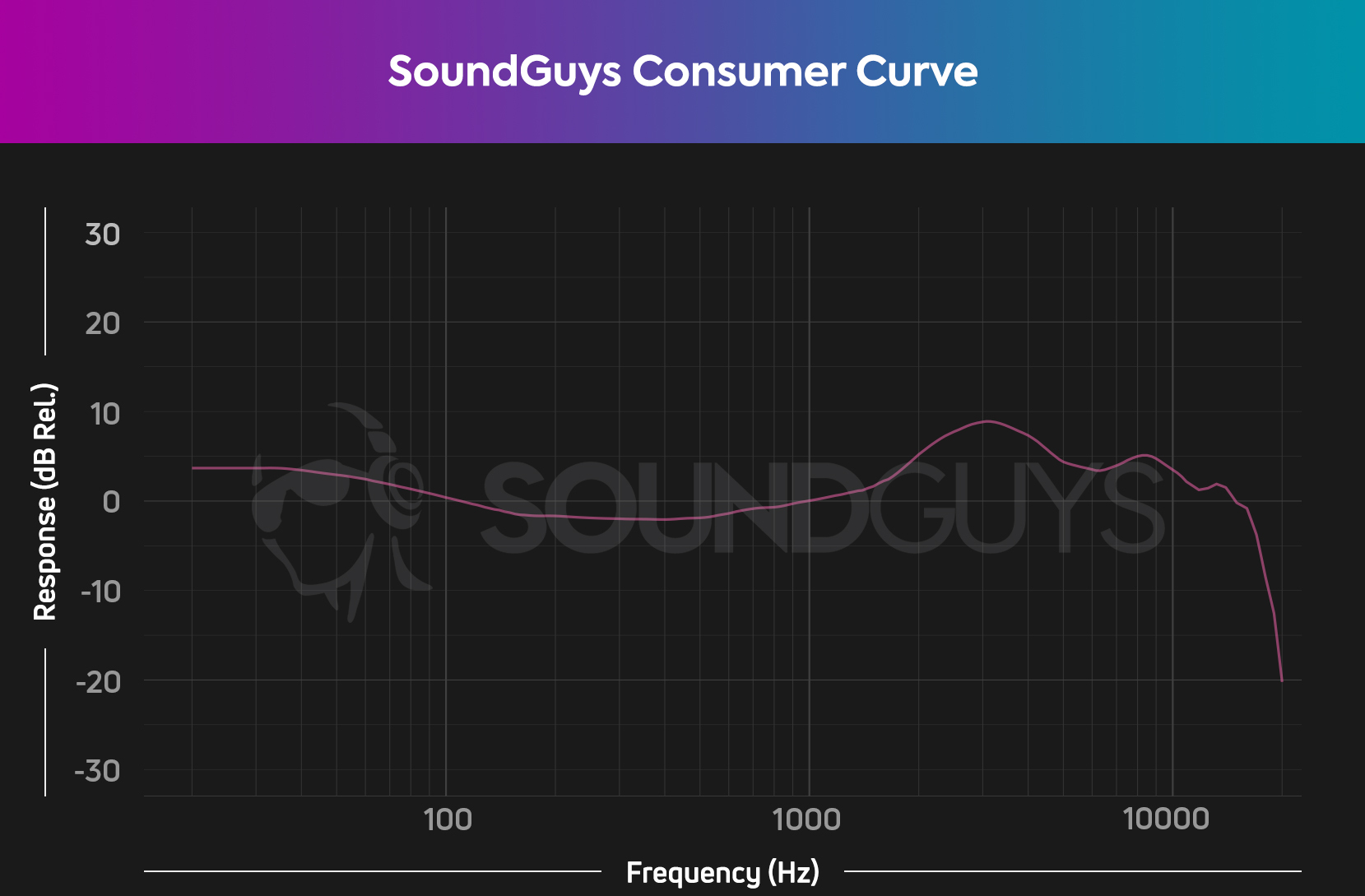

A note on the Bruel & Kjaer 5128

In addition to the scoring situation mentioned above, I got into it last year with a number of other people over the Bruel & Kjaer 5128. While we don’t really keep tabs on other sites’ hardware, a number of discussions have arisen online that call the use of different test heads into question, but rest assured we’re happy that we chose the best one for us.

While it’s tempting to spill a bunch of words hashing this argument out, I did a pretty good job back in 2021. And after meeting the necessary conditions we laid out, I’m confident in continuing our measurements as we have been. After all, we’re most interested in how a human is going to interact with a product, so if earbuds are tough to fit: we want to know. If sound quality changes when you have a tough time getting the right fit with headphones: we need to know. So we’re a little different.

In a presentation late in 2021, a number of quirks were discussed about the test head’s performance in relation to competing units. Unsurprisingly, a test head with a more anthropomorphically-accurate ear canal that better represents the acoustic impedance of a human ear didn’t quite match up with older ones with a more basic design. While we could go back and forth about this, we had to make decisions, and we moved to make our own preference curve for headphones drawing upon past research as well as using benchmark products to guide what we want to look for.

For the moment it seems as if it’ll take a while to see an official version of the Harman Target for the B&K 5128, but as always we’re going to press on with the best information available to us. Considering that our bespoke plotting software can ingest any number of targets for automatic chart creation/error analysis, we’re excited to see how things shake out in the coming years. If we update our targets, all we have to do is dump them into our database and we’re good to go.
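As a rough illustration of what comparing a measurement against a swappable target can look like, here is a small Python sketch. It is not our plotting software: the function names, the curve format (a sorted list of frequency and level pairs), the straight-line interpolation between points, and every number in it are assumptions chosen to keep the example short.

```python
from bisect import bisect_left

Curve = list[tuple[float, float]]  # (frequency in Hz, level in dB), sorted by frequency


def interpolate(curve: Curve, freq: float) -> float:
    """Linearly interpolate the curve's level at `freq` (clamped at both ends)."""
    freqs = [f for f, _ in curve]
    if freq <= freqs[0]:
        return curve[0][1]
    if freq >= freqs[-1]:
        return curve[-1][1]
    i = bisect_left(freqs, freq)
    (f0, d0), (f1, d1) = curve[i - 1], curve[i]
    return d0 + (d1 - d0) * (freq - f0) / (f1 - f0)


def deviation_from_target(measured: Curve, target: Curve) -> Curve:
    """Return (frequency, measured minus target) at each measured point."""
    return [(f, db - interpolate(target, f)) for f, db in measured]


def mean_absolute_error(measured: Curve, target: Curve) -> float:
    """Average size of the deviation, in dB, ignoring its sign."""
    errors = deviation_from_target(measured, target)
    return sum(abs(e) for _, e in errors) / len(errors)


# Made-up curves for illustration only; not a real target or measurement.
example_target: Curve = [(100.0, 4.0), (1000.0, 0.0), (10000.0, -2.0)]
example_measurement: Curve = [(100.0, 6.0), (550.0, 2.0), (1000.0, -1.0)]

print(deviation_from_target(example_measurement, example_target))
print(mean_absolute_error(example_measurement, example_target))
```

Because the target is just another curve passed in as an argument, swapping in an updated one means changing the data, not the code. A real tool would more likely interpolate on a logarithmic frequency axis and use far denser data.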

A note on verbiage in reviews

As SoundGuys gets older and our coverage expands, we’re in a much different place than we were when I started working here years ago. Despite our best efforts to avoid situations where we may confuse readers, we painted ourselves into a bit of a corner with no good way out when we jettisoned all confusing language around sound quality.

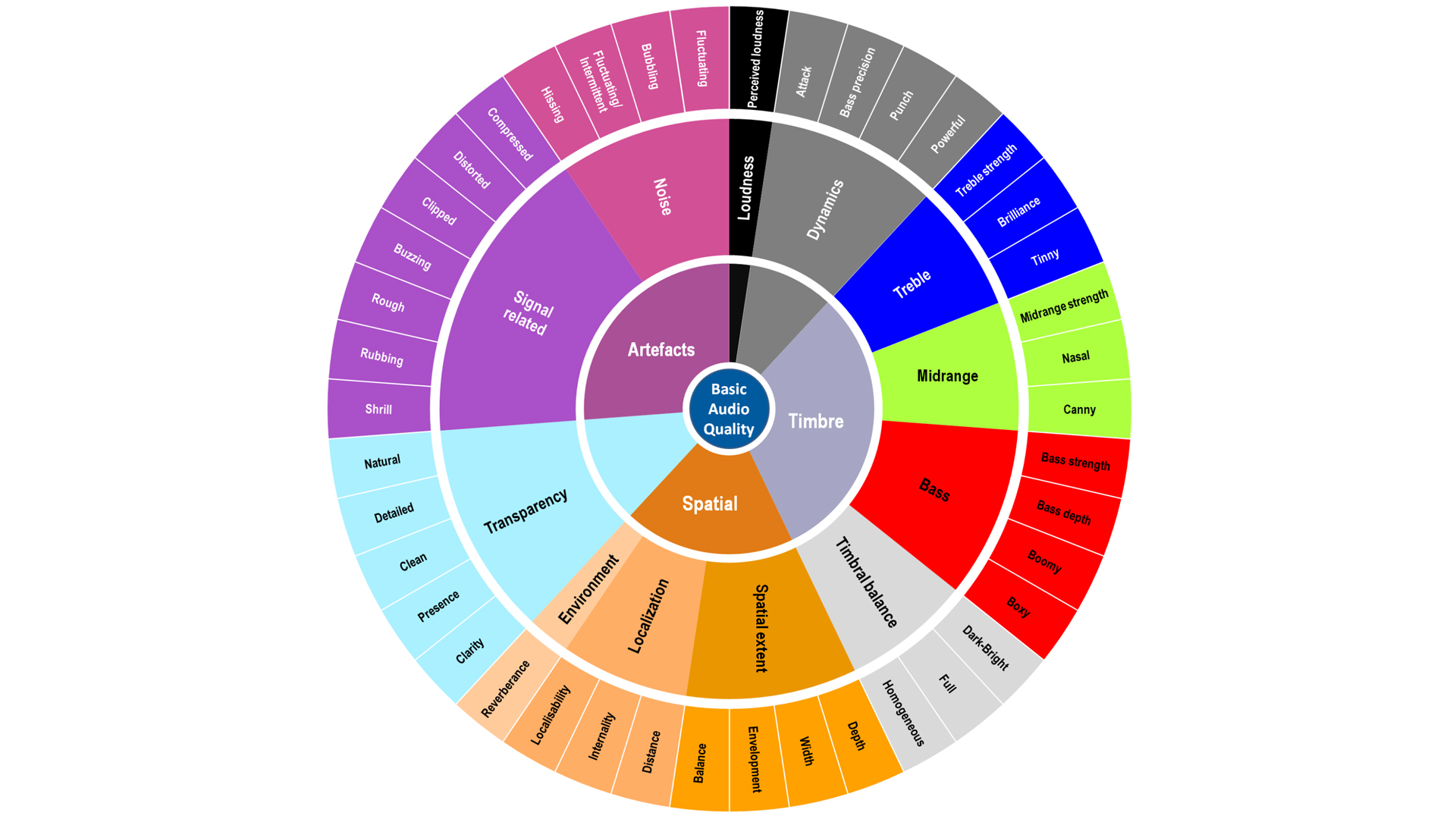

If you’ve read headphone reviews at other sites, you may have come across some words that sound great, but seem to be there to add color or flair to a description rather than meaning. Believe it or not, that’s not always true—many of these descriptions actually do describe features of sound quality laid out by a professional standard called “ITU-R BS.2399-0.” It’s an expert consensus lexicon around things that definitely need an expert consensus lexicon, but it’s still not perfect. Because its purpose is exclusively for experts to discuss sound quality to the exclusion of everyone else, it could still cause more confusion than understanding among the uninitiated. After all, it’s not meant for this type of person to begin with!

The main shortcoming with using ITU-R BS.2399-0 to discuss sound quality is twofold: some of its words have previously established meanings that don’t immediately tell a naive audience what they mean in the context of audio, and several others draw insufficient distinction between different concepts. It’s extremely difficult to serve the needs of a novice reader while also using this lexicon because almost all of the terms have very different commonly understood meanings. Though most of the words used to describe things like signal-related issues and noise are easy to understand without a formal introduction to the standard, several others are… not. Things like describing bass as “boxy” or midrange as “nasal” won’t always be well understood by people wondering if they should buy one particular set of true wireless earphones. It’s just not in their lexicon.

When a term isn’t in someone’s lexicon, most won’t even look it up: they’ll attempt to figure it out by context clues—which doesn’t help here. Even if you were to try to look up one of the terms in the standard, it’s not always easy to find because these particular definitions can’t be found in a dictionary. Because readers are encountering words they already recognize in a new context, they’ll try to reconcile their existing understanding of the concept with this new use. Consequently, the logic errors of amphiboly and equivocation are virtually guaranteed among readers who aren’t familiar with the lexicon above, and are trying to determine what the heck they just read. For example: they may take “bright” to mean “good” and “dark” to mean “bad” when the intended meaning has to do with emphasis in bass and treble—neither good nor bad.

Loss of specificity in words can lead to really bad misunderstandings (think “flammable” vs. “inflammable,” “figurative” vs. “literal”), so we avoid it as much as possible. While I am no prescriptive grammarian, I don’t believe words used in unrelated contexts are the best choice in writing, and neither do the largest search engines. Grammar isn’t directly policed by search engines, but bad grammar and technical writing mistakes are strongly negative for the user experience, which does impact your ranking. Since the vast majority of people finding your site through search aren’t going to be at the same expertise level as someone who is familiar with the latest ITU standards, any conflict with someone’s understanding of a word is going to hurt in one way or another.

However, now that we have the ability to talk about many of these issues more directly, we need a linguistic mechanism to convey this info when we cover higher-end products. Consequently, we’ve decided to proceed with selected elements of this standard. We will be making a concerted effort to make sure everyone can understand exactly what we mean immediately and concretely—you should not be able to finish a sentence that we’ve written using one of these terms without being shown what it means. Terms like “balanced” and “full” have already been misused to the point of meaninglessness, so we won’t be using those, for example.


Luckily, we live in the future, and there are several ways we can make sure to convey the information we want to. In any section where we discuss sound quality, we’ll be enabling an automatic glossary so that a mouse-over of those words will display a definition and play an example of what we’re talking about so that there’s zero confusion. Nobody will have to look up terms or be confused by what we’re talking about: the definitions are right there for you to learn, with ample context nested in the widget, and as much explanation as you need buried in the site.
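For the curious, the core of an automatic glossary can be sketched in a few lines of Python. This is not the widget on the site: the `annotate` function, the choice of an HTML `<abbr>` tag with a `title` tooltip, and the placeholder definitions are all assumptions for illustration, and the sketch leaves out the audio example entirely.

```python
import html
import re

# Placeholder entries only; these are NOT the definitions from ITU-R BS.2399-0.
GLOSSARY = {
    "boxy": "placeholder definition shown on mouse-over",
    "nasal": "another placeholder definition",
}


def annotate(text: str, glossary: dict[str, str]) -> str:
    """Wrap each glossary term found in `text` in an <abbr> tag.

    Browsers show the `title` attribute as a tooltip on mouse-over.
    Matching is case-insensitive and limited to whole words.
    """
    if not glossary:
        return html.escape(text)
    # Longest terms first so a longer term wins over a shorter one it contains.
    terms = sorted(glossary, key=len, reverse=True)
    pattern = re.compile(
        r"\b(" + "|".join(re.escape(t) for t in terms) + r")\b",
        re.IGNORECASE,
    )

    def wrap(match: re.Match) -> str:
        word = match.group(0)
        definition = html.escape(glossary[word.lower()], quote=True)
        return f'<abbr title="{definition}">{word}</abbr>'

    # Escape the text first so the only markup in the result is what we add.
    return pattern.sub(wrap, html.escape(text))


print(annotate("The bass sounds boxy and the mids are nasal.", GLOSSARY))
```

Keeping the definitions in one dictionary means a term is explained the same way everywhere it appears, which is the whole point of the exercise.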

Should this impact our user experience on the site or prove unpopular enough, it may get abandoned—as our only goal here is to reach readers. If anything stands in the way of that, it tends to get the boot around these parts.
