AI assistants belong in our ears, not on screens

August 1, 2024

In the race to integrate artificial intelligence into our daily lives, tech companies are experimenting with an array of AI-enabled devices. From smart glasses and pendants to AI-powered pocket devices, companies seem to be throwing everything at the wall to see what sticks. However, in this fixation on visual and tactile interfaces, we might be overlooking the most natural and instantaneous winner of the AI gadget revolution: our ears.

Audio-based AI isn’t just a matter of convenience; it represents a fundamental change in how we perceive and interact with artificial intelligence. Many AI services offer voice interaction, but only a few major tech players have brought AI conversations to earbuds: Samsung’s latest Galaxy Buds3 Pro support Galaxy AI for live translation, and Nothing has integrated ChatGPT into its Nothing Ear as a voice assistant. Google’s next Pixel Buds are likely to incorporate Gemini AI as well.

Still, given how many AI-integrated visual interfaces are available, the scarcity of audio options is surprising, especially since conversation is more natural than typing and listening to a voice is more emotionally rewarding than reading text. That’s ultimately why I think pins, pendants, and glasses won’t be the final home for AI assistants.

The advantages of audio-based AI

Screens demand our visual focus, often disrupting our engagement with the physical world around us. Smart glasses, while innovative, face challenges with social acceptance and practical wearability because they are often bulky. Having to constantly look at or touch a device to interact with AI creates a barrier between us and our environment, potentially hindering rather than enhancing our daily experiences.

In contrast, audio-based AI assistants offer a more natural and seamless integration into our lives. The act of speaking and listening is inherently more efficient and intuitive than tapping on screens. As natural language processing continues to advance, conversing with AI is becoming increasingly fluid. For instance, OpenAI has begun to roll out its ‘Advanced Voice Mode’ for ChatGPT Plus users, promising more natural, real-time conversations with the ability to detect and respond to emotions.

Moreover, we’re already comfortable with audio devices. Headphones and earbuds have become ubiquitous, worn by people of all ages throughout their daily activities, not just for music but also for audiobooks, podcasts, and phone calls. This existing comfort with audio technology provides a perfect foundation for the integration of AI assistants.

One product hoping to capitalize on this trend is the upcoming Iyo One, which forgoes screens entirely. Iyo claims that these wireless earbuds provide an AI that can coach you through workouts, remind you what’s on your grocery list, and selectively isolate audio from a noisy environment. The company calls this all-in-one, conversation-based interface “audio-computing.”

Audio-based AI assistants offer more accessibility.

Audio-based AI assistants also offer significant accessibility benefits. For visually impaired users, these devices provide a natural way to interact with AI without relying on screens. People with mobility issues can benefit from voice commands, which are often more convenient than typing or tapping. Audio-based AI can also be a game-changer for those with reading difficulties or dyslexia, offering an easier way to access and process information.

Still, there are a lot of kinks to work out. The issue of trustworthy information is paramount, as AI hallucinations (instances where AI generates false or nonsensical information) remain a significant concern. Privacy is another crucial consideration: how do we ensure these devices listen and respond only when we want them to? Many people already encounter this problem with smart speakers at home. There’s also the more existential risk of over-reliance on AI assistants, which could erode our ability to think and act independently.

AI relationships

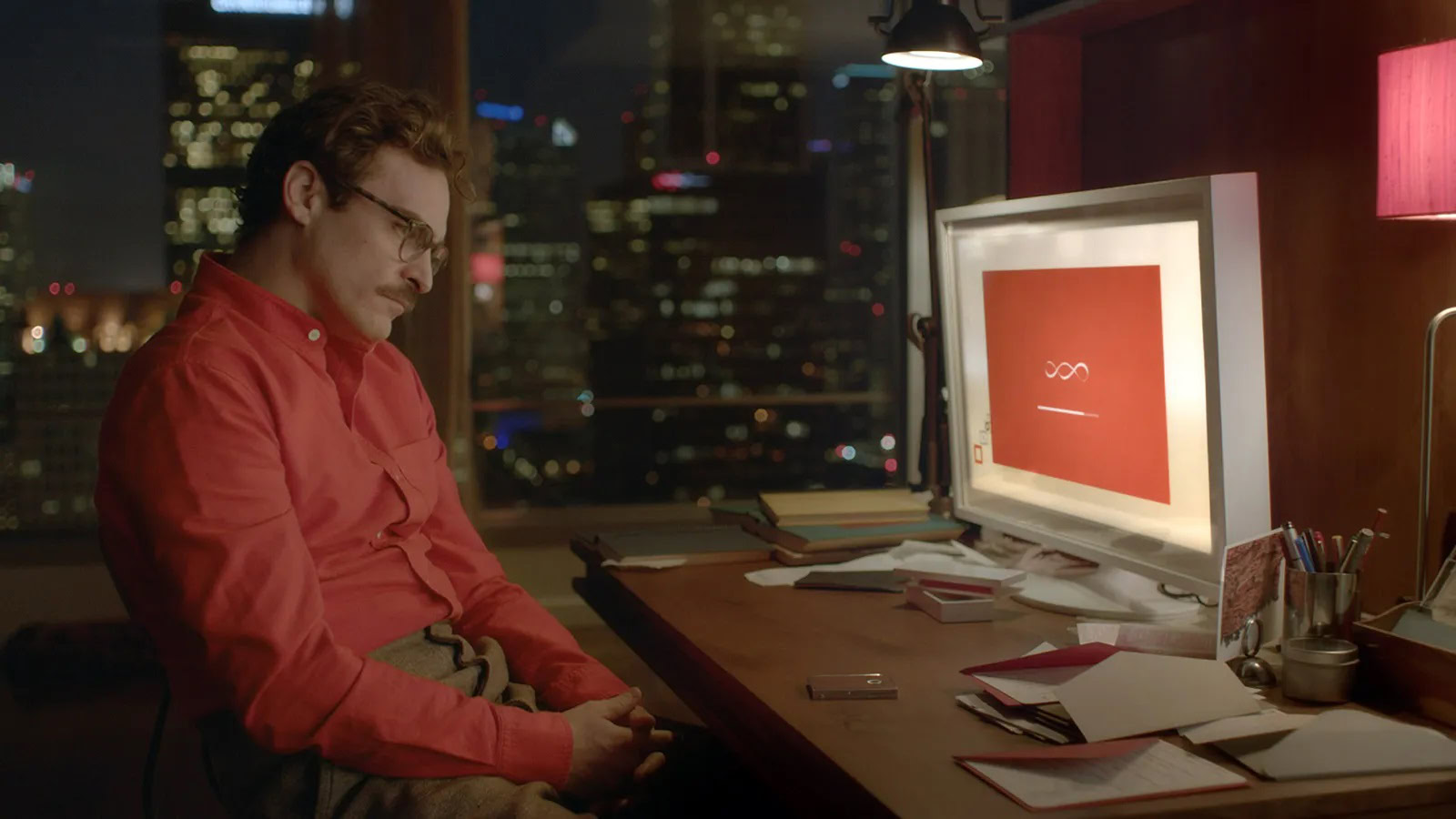

Despite these challenges, it’s clear that we are chasing, or perhaps hurtling towards, a reality reminiscent of the 2013 movie Her. This science fiction film depicts a man falling in love with an earbud-based AI system voiced by Scarlett Johansson. The film explores the deep emotional connections that can form between humans and an AI that has far surpassed the Turing test, and it isn’t nearly as dystopian in tone as you might expect. When the film came out, this depiction may have seemed far-fetched, but not today.

It's much easier to become emotionally attached to something with a voice.

The line between science fiction and reality is blurring fast, and we are already seeing people form deep emotional bonds with AI. In Japan, for instance, approximately 4,000 men have “married” AI holograms using certificates issued by Gatebox, a device that costs roughly $1,000. Recently, one of ChatGPT’s voices, “Sky,” even mimicked Scarlett Johansson’s voice (perhaps unintentionally). When OpenAI was forced to pull the voice due to potential legal issues, there was considerable pushback from users who had grown attached to it.

We saw a similar but even stronger reaction in February 2023, when the generative AI chatbot Replika altered its software to remove certain intimacy features, including erotic roleplay, expressions of love, and virtual displays of affection like hugs and kisses. Many users reported significant psychological distress akin to losing a real relationship. In response to the outcry, Replika quickly reinstated the functionality for existing users.

AI excels at simulating care, but can it truly reciprocate emotional vulnerability?

Obviously, as we navigate this new frontier of human-AI relationships, many ethical concerns arise. Viewed through an ethics-of-care framework, AI is very good at simulating attentiveness and providing a non-judgmental ear. Still, I’m skeptical, to say the least, that AI can genuinely reciprocate feelings or exhibit true vulnerability. And unlike in human relationships, users can ignore or turn off an AI whenever they like without consequences. It’s all a convincing illusion that, while comforting, is fundamentally different from the give-and-take of human relationships.

AI is coming to wireless earbuds, whether you like it or not

As we look to the future of AI interaction, it’s clear to me that AI assistants belong in our ears, not on screens. This isn’t just a technological preference but a reflection of how humans naturally communicate and form connections. Voice interaction is more intuitive, less intrusive, and far more effortless than typing on a screen or navigating visual interfaces.

AI assistants will inevitably find a home in our ears, but we must guard against the erosion of genuine human connection.

Text-based AI interaction will certainly retain its place in specific contexts, such as code generation, and audio interfaces present obvious hurdles for hearing-impaired users. But for most people, most AI interactions will likely shift to audio. At the same time, by giving AI a voice, we’re opening up new dimensions of intimacy and sentimentality that make these interactions even more compelling. The ease and comfort of AI companionship could lead to what philosopher Shannon Vallor calls “moral deskilling,” potentially eroding our ability to navigate human relationships.

AI might have started on screens, but as it gets better at understanding context and engaging in natural conversation, our ears will become the primary interface for this new form of interaction, and we must approach that future thoughtfully. The challenge will be to avoid getting swept up in the novelty and allure of audio assistants and to preserve the essence of human connection that no algorithm can replicate.

Do you think AI assistants belong in our ears?